Team Project (tP):

Week 12 [Mon, Sep 6th] - Project

tP:

- Attend the practical exam dry run during the lecture on Fri, Apr 2nd

- Tweak the product as per peer-testing results

- Draft the PPP

- Double-check RepoSense compatibility

tP: mid-v2.1

1. Attend the practical exam dry run during the lecture on Fri, Apr 2nd

- See info in the panel below:

Admin tP Deliverables → Practical Exam - Dry Run

PE-D Overview

What: The latest release of the v2.0 period is subjected to a round of peer acceptance/system testing, also called the Practical Exam (PE) Dry Run, as this round of testing will be similar to the graded PE.

When, where: It uses a 40-minute slot at the start of the week 11 lecture (to be done online).

Since the stated lecture falls on April 2, 2021, a public holiday, the PE-D will be conducted on Saturday, April 3, 2021, from 4 to 6 pm.

PE Overview

Objectives:

- The primary objective of the PE is to increase the rigor of project grading. Assessing most aspects of the project involves an element of subjectivity. As the project counts for a large percentage of the final grade, it is not prudent to rely on evaluations by tutors alone, as there can be significant variations in how different tutors assess projects. That is why we collect more data points via the PE, so as to minimize the chance of your project being affected by evaluator bias.

- The PE is also used to evaluate your manual testing skills, product evaluation skills, effort estimation skills, etc.

- Note that significant project components are not graded solely based on peer ratings. Rather, PE data are cross-validated with tutors' grades to identify cases that need further investigation. When peer inputs are used for grading, they are usually combined with tutors' grades, with an appropriate weight for each. In some cases, ratings from team members are given a higher weight than ratings from other peers, if that is appropriate.

- The PE is not a means of getting you to bring each other down; it is simply a means of identifying as many bugs as possible. No one can sabotage another person or inject bugs into others' products. All anyone can do is point out potential problems. If those turn out to be false positives, no real harm is done.

- You are not taking marks from someone else; at least, don't think of it that way. The point of contention is 'is this really a bug?', which is independent of the people involved. Furthermore, the reward for detecting a bug and the penalty for having a bug in your code are calculated independently.

- Having said that, none of us like it when others point out problems in our work. Some of us don't even like pointing out problems in others' work. We just have to learn not to take bug reports personally. Another important lesson is learning how to report bugs in a way that doesn't feel like you are attacking or trying to sabotage the dev team.

Grading:

- Your performance in the practical exam will affect your final grade and your peers', as explained in the Admin: Project Grading section.

- As such, we have put in measures to identify and penalize insincere/random evaluations.

- Also see:

Admin tP Grading → Notes on how marks are calculated for PE

Grading bugs found in the PE

- Of the Developer Testing component (3A, based on the bugs found in your code) and the System/Acceptance Testing component (3B, based on the bugs found in others' code), the one you do better in will be given a 70% weight and the other a 30% weight, so that your total score is driven by your strengths rather than your weaknesses.

- Bugs rejected by the dev team, if the rejection is approved by the teaching team, will not affect marks of the tester or the developer.

- The penalty/credit for a bug varies based on the severity of the bug: severity.High > severity.Medium > severity.Low > severity.VeryLow

- The three types (i.e., type.FunctionalityBug, type.DocumentationBug, type.FeatureFlaw) are counted for three different grade components. The penalty/credit can vary based on the bug type. Given that you are not told which type has a bigger impact on the grade, always choose the most suitable type for a bug rather than trying to choose a type that benefits your grade.

- The penalty for a bug is divided equally among assignees.

- Developers are not penalized for duplicate bug reports they receive, but testers earn credit for duplicate bug reports they submit, as long as the duplicates are not submitted by the same tester.

- Obvious bugs (i.e., the same bug reported by many testers) earn less credit for the tester and a slightly higher penalty for the developer.

- If the team you tested has a low bug count (i.e., the total number of bugs found by all testers is low), we will fall back on other means (e.g., performance in the PE dry run) to calculate your marks for system/acceptance testing.

- Your marks for developer testing depend on the bug density rather than the total bug count. Here's an example:

  - n bugs found in your feature; it is a big feature consisting of a lot of code → 4/5 marks
  - n bugs found in your feature; it is a small feature with a small amount of code → 1/5 marks

- You don't need to find all bugs in the product to get full marks. For example, finding half of the bugs in that product or 4 bugs, whichever is lower, could earn you full marks.

- Excessive incorrect downgrading/rejecting/duplicate-flagging, if deemed an attempt to game the system, will be penalized.
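To make three of the rules above concrete, here is a small Python sketch of the 70/30 weighting of the Developer Testing and System/Acceptance Testing components, the equal split of a bug's penalty among its assignees, and the example full-marks target (half of the product's bugs or 4 bugs, whichever is lower). The function names are hypothetical and this is not the teaching team's grading script; severity, bug type, and bug density are deliberately left out.

```python
from __future__ import annotations


def combined_testing_score(developer_testing: float, system_testing: float) -> float:
    """Give the stronger component 70% of the weight and the weaker one 30%."""
    stronger = max(developer_testing, system_testing)
    weaker = min(developer_testing, system_testing)
    return 0.7 * stronger + 0.3 * weaker


def penalty_per_assignee(bug_penalty: float, assignees: list[str]) -> dict[str, float]:
    """Split one bug's penalty equally among the people it is assigned to."""
    if not assignees:
        raise ValueError("the bug has no assignees to share the penalty")
    share = bug_penalty / len(assignees)
    return {name: share for name in assignees}


def full_marks_target(total_bugs_in_product: int) -> float:
    """The example target: half of the product's bugs or 4, whichever is lower."""
    return min(total_bugs_in_product / 2, 4)
```

For instance, component scores of 80 and 50 combine to about 71 regardless of which component is the stronger one, which is the "driven by your strengths" effect described above.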

PE Preparation

- It's similar to the PE-D preparation, given below:

PE-D Preparation

- Ensure that you have accepted the invitation to join the GitHub org used by the module. Go to https://github.com/nus-cs2113-AY2021S2 to accept the invitation.

- Ensure you have access to a computer that is able to run module projects, e.g., one that has the right Java version.

- Download the latest CATcher and ensure you can run it on your computer. You should have done this when you smoke-tested CATcher earlier in the week.

If not using CATcher

Issues created for PE-D and PE need to be in a precise format for our grading scripts to work. Incorrectly formatted responses will have to be discarded. Therefore, you are not allowed to use the GitHub interface for PE-D and PE activities unless you have obtained our permission first.

- Create a public repo in your GitHub account with the following name:

  - PE Dry Run: ped
  - PE: pe

- Enable its issue tracker and add the following labels to it (the label names should be precisely as given).

Bug Severity labels:

- severity.VeryLow: A flaw that is purely cosmetic and does not affect usage, e.g., a typo/spacing/layout/color/font issue in the docs or the UI that doesn't affect usage.
- severity.Low: A flaw that is unlikely to affect normal operations of the product. Appears only in very rare situations and causes only a minor inconvenience.
- severity.Medium: A flaw that causes occasional inconvenience to some users, but they can continue to use the product.
- severity.High: A flaw that affects most users and causes major problems for users, i.e., makes the product almost unusable for most users.

When applying these to documentation bugs, replace 'user' with 'reader'.

Type labels:

- type.FunctionalityBug: A functionality does not work as specified/expected.
- type.FeatureFlaw: Some functionality is missing from a feature delivered in v2.1 in a way that makes the feature less useful to the intended target user for normal usage, i.e., the feature is not 'complete'. In other words, an acceptance-testing bug that falls within the scope of v2.1 features. These issues are counted against the product design aspect of the project.
- type.DocumentationBug: A flaw in the documentation, e.g., a missing step, a wrong instruction, typos.

- Have a good screen grab tool with annotation features so that you can quickly take a screenshot of a bug, annotate it, and post it in the issue tracker.

- You can use Ctrl+V to paste a picture from the clipboard into a text box in a bug report.

- Download the product to be tested.

  - PE Dry Run: After you have been notified of which team to test (likely in the morning of PE-D day), download the jar file from the team's releases page.

  - PE: After you have been notified of the download location, download the zip file that bears your name.

Testing tips

Use easy-to-remember patterns in test data. For example, if you use 12345678 as a phone number while testing and it appears as 2345678 somewhere else in the UI, you can easily spot that the first digit has gone missing. But if you used a random number instead, detecting that bug won't be as easy. Similarly, if you use Alice Bee, Benny Lee, Charles Pereira as test data (note how the names start with letters A, B, C), it will be easy to detect if one goes missing, or they appear in the incorrect order.

Go wide before you go deep. Do light testing of all features first. That will give you a better idea of which features are likely to be buggier. Spending equal time on all features, or testing in the order the features appear in the UG, is not always the best approach.

PE Phase 1: Bug Reporting

PE Phase 1 will be conducted under exam conditions. We will be following SoC's E-Exam SOP, combined with the deviations/refinements given below. Any non-compliance will be dealt with in the same way as non-compliance in the final exam.

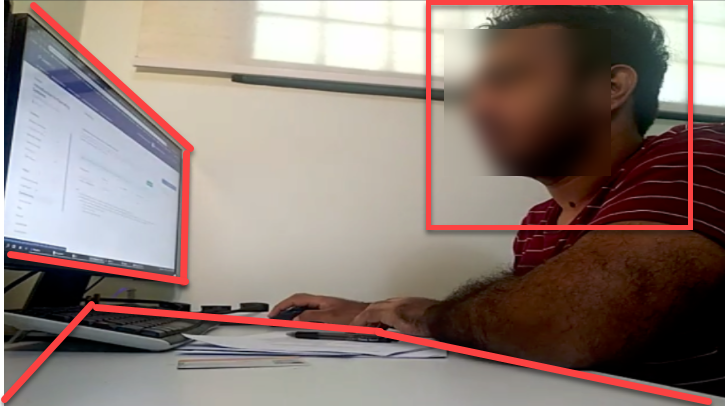

- Proctoring will be done via Zoom. You will not be admitted if the following requirements are not met.

- At least 1 day in advance, you will be notified via LumiNUS of the Zoom session you should log in to. Remember: we will NOT use the same Zoom session as the lectures.

- You need two Zoom devices (PC: chat, audio, video; phone: video, audio), unless you have an external webcam for your PC.

- Set your Zoom display name to show your actual name as shown on LumiNUS.

- Add [PC] in front of the first name of your Zoom display name on the PC. E.g., John Doe (for the Zoom session connected via the phone) and [PC] John Doe (for the Zoom session on the PC).

- Set your camera so that all the following are visible:

- your head (side view)

- the computer screen

- the work area (i.e., the table top)

- Join the Zoom waiting room 15-30 minutes before the start time. Admitting you to the Zoom session can take some time.

- In case of a Zoom outage, we'll fall back on MS Teams (MST). Make sure you have MST running; proctoring will be done via the individual tutorial MST teams that we have been using.

- Recording the screen is not required.

- You are allowed to use headphones/earphones.

- Only one screen is allowed. If you want to use the secondary monitor, you should switch off the primary monitor. The screen being used should be fully visible in the Zoom camera view.

- Do not use the public chat channel to ask the prof questions. If you do, you might accidentally reveal which team you are testing.

- Do not use more than one CATcher instance at the same time. Our grading scripts will red-flag you if you use multiple CATcher instances in parallel.

- Use MS Teams (not Zoom) private messages to communicate with the prof. Fall back on Zoom chat only if you didn't receive a reply via MST.

- Do not view others' Zoom video feeds while the testing is ongoing. Keep the video view minimized.

- During the bug reporting periods (i.e., PE Phase 1 - part I and PE Phase 1 - part II), do not use websites/software not in the list given below. In particular, do not visit GitHub. However, you are allowed to visit pages linked in the UG/DG for the purpose of checking if the link is correct. If you need to visit a different website or use another software, please ask for permission first.

- Website: LumiNUS

- Website: Module website (e.g., to look up PE info)

- Software: CATcher, any text editor, any screen grab software

- Software: PDF reader (to read the UG/DG or other references such as the textbook)

- Do not have any other software running in the background, e.g., Telegram chat.

- This is a manual testing session. Do not use any test automation tools or custom scripts.

- When, where: Week 13 lecture slot. Use the same Zoom link used for the regular lecture.

PE Phase 1 - Part I Product Testing [60 minutes]

Bonus marks for high accuracy rates!

You will receive bonus marks if a high percentage (e.g., >70%) of your bugs are accepted as reported (i.e., the eventual type.* and severity.* of the bug match the values you chose initially and the bug is accepted by the team).
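A minimal Python sketch of how the "accepted as reported" rate could be computed, assuming each bug record carries the tester's original type.*/severity.* labels, the final ones, and whether the bug was accepted. The BugReport record, the function names, and the strict comparison against 0.7 are illustrative assumptions; the text gives >70% only as an example threshold.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class BugReport:
    initial_type: str      # type.* label chosen by the tester
    initial_severity: str  # severity.* label chosen by the tester
    final_type: str        # type.* label after the bug is finalized
    final_severity: str    # severity.* label after the bug is finalized
    accepted: bool         # whether the bug was eventually accepted


def accepted_as_reported(bug: BugReport) -> bool:
    """Accepted, with the final type and severity matching the tester's choice."""
    return (
        bug.accepted
        and bug.final_type == bug.initial_type
        and bug.final_severity == bug.initial_severity
    )


def accuracy_rate(bugs: list[BugReport]) -> float:
    """Fraction of a tester's bugs that were accepted as reported."""
    if not bugs:
        return 0.0
    return sum(accepted_as_reported(bug) for bug in bugs) / len(bugs)


def qualifies_for_bonus(bugs: list[BugReport], threshold: float = 0.7) -> bool:
    """Strict comparison, mirroring the '>70%' example."""
    return accuracy_rate(bugs) > threshold
```

A bug whose severity is later downgraded counts against the rate even if it is accepted, which is why choosing the most suitable labels up front matters.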

Test the product and report bugs as described below. You may report both product bugs and documentation bugs during this period.

Testing instructions for PE and PE-D

a) Launching the JAR file

- Get the jar file to be tested:

  - PE Dry Run: Download the latest JAR file from the team's releases page, if you haven't done this already.

  - PE: Download the zip file from the given location, if you haven't done that already.

    - Unzip the downloaded zip file with the password (to be given to you at the start of the PE, via the LumiNUS gradebook). This will give you another zip file with the name suffix _inner.zip.

    - Unzip the inner zip file. This will give you the jar file and other PDF files needed for the PE. Warning: do not run the jar file while it is still inside the zip file.

- Put the downloaded jar file in an empty folder in which the app is allowed to create files (i.e., do not use a write-protected folder).

- Open a command window. Run the java -version command to ensure you are using Java 11.

- Check the UG to see if there are extra things you need to do before launching the JAR file, e.g., download another file from somewhere. You may visit the team's releases page on GitHub if they have provided some extra files you need to download.

- Launch the jar file using the java -jar command rather than double-clicking (reason: to ensure the jar file is using the same Java version that you verified above). Use double-clicking as a last resort. If you are on Windows, use the DOS prompt or PowerShell (not the WSL terminal) to run the JAR file.

- If the product doesn't work at all: if the product fails catastrophically, e.g., it cannot even launch, or even the basic commands crash the app, contact the invigilator (via MS Teams, and failing that, via Zoom chat) to receive a fallback team to test.
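If you want to confirm your Java setup while preparing, before the session starts (the PE itself is manual testing only, with no custom scripts), the following Python sketch runs java -version and extracts the major version. It relies on two facts: java -version prints to standard error rather than standard output, and Java 8 and earlier use the legacy 1.x numbering (e.g., 1.8.0_282) while Java 9 and later print, e.g., 11.0.10. The function names are hypothetical.

```python
import re
import subprocess


def java_major_version(version_output: str) -> int:
    """Extract the major Java version from `java -version` output.

    Java 8 and earlier print e.g. "1.8.0_282"; Java 9+ print e.g. "11.0.10".
    """
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        raise ValueError("no version string found in the output")
    major = int(match.group(1))
    if major == 1 and match.group(2) is not None:
        return int(match.group(2))  # legacy "1.x" numbering, e.g. 1.8 -> 8
    return major


def installed_java_major_version() -> int:
    """Run `java -version` (which prints to stderr) and parse the result."""
    result = subprocess.run(
        ["java", "-version"], capture_output=True, text=True, check=True
    )
    return java_major_version(result.stderr)
```

If installed_java_major_version() returns 11, the same java command on your PATH is the one that java -jar will use when you launch the product from that command window.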

b) What to test

- PE Dry Run: Test the product based on the User Guide available from their GitHub website, https://{team-id}.github.io/tp/UserGuide.html.

  - Do system testing first, i.e., does the product work as specified by the documentation? If there is time left, you can do acceptance testing as well, i.e., does the product solve the problem it claims to solve?

- PE: Test based on the Developer Guide (Appendix named Instructions for Manual Testing) and the User Guide. The testing instructions in the Developer Guide can provide you with some guidance, but if you follow those instructions strictly, you are unlikely to find many bugs. You can deviate from the instructions to probe areas that are more likely to have bugs.

- As before, do both system testing and acceptance testing but give priority to system testing as those bugs can earn you more credit.

c) What bugs to report?

- PE Dry Run: You may report functionality bugs, UG bugs, and feature flaws.

Admin tP Grading → Functionality Bugs

These are considered functionality bugs:

Behavior differs from the User Guide

A legitimate user behavior is not handled e.g. incorrect commands, extra parameters

Behavior is not specified and differs from normal expectations e.g. error message does not match the error

Admin tP Grading → Feature Flaws

These are considered feature flaws:

The feature does not solve the stated problem of the intended user i.e., the feature is 'incomplete'

Hard-to-test features

Features that don't fit well with the product

Features that are not optimized enough for fast-typists or target users

Admin tP Grading → Possible UG Bugs

These are considered UG bugs (if they hinder the reader):

Use of visuals

- Not enough visuals e.g., screenshots/diagrams

- The visuals are not well integrated to the explanation

- The visuals are unnecessarily repetitive e.g., same visual repeated with minor changes

Use of examples:

- Not enough or too many examples e.g., sample inputs/outputs

Explanations:

- The explanation is too brief or unnecessarily long.

- The information is hard to understand for the target audience. e.g., using terms the reader might not know

Neatness/correctness:

- looks messy

- not well-formatted

- broken links, other inaccuracies, typos, etc.

- hard to read/understand

- unnecessary repetitions (i.e., hard to see what's similar and what's different)

- You can also post suggestions on how to improve the product.

Be diplomatic when reporting bugs or suggesting improvements. For example, instead of criticising the current behavior, simply suggest alternatives to consider.

- PE: Report functionality bugs:

Admin tP Grading → Functionality Bugs

These are considered functionality bugs:

Behavior differs from the User Guide

A legitimate user behavior is not handled e.g. incorrect commands, extra parameters

Behavior is not specified and differs from normal expectations e.g. error message does not match the error

- Do not post suggestions, but if the product is missing a critical functionality that makes the product less useful to the intended user, it can be reported as a bug of type type.FeatureFlaw. The dev team is allowed to reject bug reports framed as mere suggestions and/or lacking a convincing justification as to why the omission of that functionality is problematic.

Admin tP Grading → Feature Flaws

These are considered feature flaws:

The feature does not solve the stated problem of the intended user i.e., the feature is 'incomplete'

Hard-to-test features

Features that don't fit well with the product

Features that are not optimized enough for fast-typists or target users

- You may also report UG bugs.

Admin tP Grading → Possible UG Bugs

These are considered UG bugs (if they hinder the reader):

Use of visuals

- Not enough visuals e.g., screenshots/diagrams

- The visuals are not well integrated to the explanation

- The visuals are unnecessarily repetitive e.g., same visual repeated with minor changes

Use of examples:

- Not enough or too many examples e.g., sample inputs/outputs

Explanations:

- The explanation is too brief or unnecessarily long.

- The information is hard to understand for the target audience. e.g., using terms the reader might not know

Neatness/correctness:

- looks messy

- not well-formatted

- broken links, other inaccuracies, typos, etc.

- hard to read/understand

- unnecessary repetitions (i.e., hard to see what's similar and what's different)

d) How to report bugs

- Post bugs as you find them (i.e., do not wait to post all bugs at the end) because bug reports created/modified after the allocated time will not count.

- Launch CATcher, and log in to the correct profile:

  - PE Dry Run: CS2113/T PE Dry run
  - PE: CS2113/T PE

- Post bugs using CATcher.

Issues created for PE-D and PE need to be in a precise format for our grading scripts to work. Incorrectly formatted responses will have to be discarded. Therefore, you are not allowed to use the GitHub interface for PE-D and PE activities unless you have obtained our permission first.

- Post bug reports in the following repo you created earlier:

  - PE Dry Run: ped
  - PE: pe

- The whole description of the bug should be in the issue description i.e., do not add comments to the issue.

e) Bug report format

- Each bug should be a separate issue, i.e., do not report multiple problems in the same bug report.

- Write good quality bug reports; poor quality or incorrect bug reports will not earn credit.

- Use a descriptive title.

- Give a good description of the bug with steps to reproduce, the expected behavior, the actual behavior, and screenshots. If the receiving team cannot reproduce the bug, you will not be able to get credit for it.

- Assign exactly one severity.* label to the bug report. Bug reports without a severity label are considered severity.Low (lower-severity bugs earn lower credit).

Bug Severity labels:

- severity.VeryLow: A flaw that is purely cosmetic and does not affect usage, e.g., a typo/spacing/layout/color/font issue in the docs or the UI that doesn't affect usage.
- severity.Low: A flaw that is unlikely to affect normal operations of the product. Appears only in very rare situations and causes only a minor inconvenience.
- severity.Medium: A flaw that causes occasional inconvenience to some users, but they can continue to use the product.
- severity.High: A flaw that affects most users and causes major problems for users, i.e., makes the product almost unusable for most users.

When applying these to documentation bugs, replace 'user' with 'reader'.

- Assign exactly one type.* label to the issue.

Type labels:

- type.FunctionalityBug: A functionality does not work as specified/expected.
- type.FeatureFlaw: Some functionality is missing from a feature delivered in v2.1 in a way that makes the feature less useful to the intended target user for normal usage, i.e., the feature is not 'complete'. In other words, an acceptance-testing bug that falls within the scope of v2.1 features. These issues are counted against the product design aspect of the project.
- type.DocumentationBug: A flaw in the documentation, e.g., a missing step, a wrong instruction, typos.
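The labelling rules in this bug report format can be expressed as a small check, sketched here in Python: label names must match exactly, at most one severity.* label is allowed with a missing one counted as severity.Low, and exactly one type.* label is required. The function name and the choice to raise an error for a missing type.* label are assumptions; CATcher and the grading scripts apply their own checks.

```python
from __future__ import annotations

SEVERITY_LABELS = {"severity.VeryLow", "severity.Low", "severity.Medium", "severity.High"}
TYPE_LABELS = {"type.FunctionalityBug", "type.FeatureFlaw", "type.DocumentationBug"}


def effective_labels(labels: list[str]) -> tuple[str, str]:
    """Return the (severity, type) a report would be counted with, or raise."""
    severities = [label for label in labels if label.startswith("severity.")]
    types = [label for label in labels if label.startswith("type.")]

    # Label names must match exactly, e.g. "severity.high" is not valid.
    unknown = [label for label in severities + types
               if label not in SEVERITY_LABELS | TYPE_LABELS]
    if unknown:
        raise ValueError(f"unrecognized label names: {unknown}")
    if len(severities) > 1:
        raise ValueError("assign exactly one severity.* label")
    if len(types) != 1:
        raise ValueError("assign exactly one type.* label")

    # A report without a severity label is counted as severity.Low.
    severity = severities[0] if severities else "severity.Low"
    return severity, types[0]
```

For example, a report labelled only type.FeatureFlaw would be counted as ("severity.Low", "type.FeatureFlaw").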

PE Phase 1 - Part II Evaluating Documents [30 minutes]

- This slot is for reporting documentation bugs only. You may report bugs related to the UG and the DG.

- For each bug reported, cite evidence and justify. For example, if you think the explanation of a feature is too brief, explain what information is missing and why the omission hinders the reader.

Admin tP Grading → Possible UG Bugs

These are considered UG bugs (if they hinder the reader):

Use of visuals

- Not enough visuals e.g., screenshots/diagrams

- The visuals are not well integrated to the explanation

- The visuals are unnecessarily repetitive e.g., same visual repeated with minor changes

Use of examples:

- Not enough or too many examples e.g., sample inputs/outputs

Explanations:

- The explanation is too brief or unnecessarily long.

- The information is hard to understand for the target audience. e.g., using terms the reader might not know

Neatness/correctness:

- looks messy

- not well-formatted

- broken links, other inaccuracies, typos, etc.

- hard to read/understand

- unnecessary repetitions (i.e., hard to see what's similar and what's different)

Admin tP Grading → Possible DG Bugs

These are considered DG bugs (if they hinder the reader):

Those given as possible UG bugs above, plus the following:


UML diagrams:

- Notation incorrect or not compliant with the notation covered in the module.

- Some other type of diagram used when a UML diagram would have worked just as well.

- The diagram used is not suitable for the purpose it is used for.

- The diagram is too complicated.

Code snippets:

- Excessive use of code e.g., a large chunk of code is cited when a smaller extract would have sufficed.

Problems in User Stories. Examples:

- Incorrect format

- Not all three parts are present

- The three parts do not match with each other

- Important user stories missing

Problems in NFRs. Examples:

- Not really a Non-Functional Requirement

- Not scoped clearly (i.e., hard to decide when it has been met)

- Not reasonably achievable

- Highly relevant NFRs missing

Problems in Glossary. Examples:

- Unnecessary terms included

- Important terms missing

PE Phase 1 - Part III Overall Evaluation [15 minutes]

- To be submitted via TEAMMATES. We recommend completing this during the PE session itself, but you have until the end of the day to submit (or revise) your submissions.

Important questions included in the evaluation:

Evaluate based on the User Guide and the actual product behavior.

| Criterion | Unable to judge | Low | Medium | High |
|---|---|---|---|---|
| target user | Not specified | Clearly specified and narrowed down appropriately | | |
| value proposition | Not specified | The value to target user is low. App is not worth using | Some small group of target users might find the app worth using | Most of the target users are likely to find the app worth using |
| optimized for target user | Not enough focus for CLI users | Mostly CLI-based, but cumbersome to use most of the time | Feels like a fast typist can be more productive with the app, compared to an equivalent GUI app without a CLI | |
| feature-fit | Many of the features don't fit with others | Most features fit together but a few may be possible misfits | All features fit together to form a cohesive whole | |

Evaluate based on fit-for-purpose, from the perspective of a target user.

For reference, the AB3 UG is here.

Evaluate based on fit-for-purpose from the perspective of a new team member trying to understand the product's internal design by reading the DG.

For reference, the AB3 DG is here.

Consider implementation work only (i.e., exclude testing, documentation, project management etc.)

The typical iP refers to an iP where all the requirements are met at the minimal expectations given.

Use the person's PPP and RepoSense page to evaluate the effort.

PE Phase 2: Developer Response

Deadline: Tue, Apr 20th 2359

This phase is for you to respond to the bug reports you received.

Bonus marks for high accuracy rates!

You will receive bonus marks if a high percentage (e.g., >80%) of bugs are accepted as triaged (i.e., the eventual type.*, severity.*, and response.* of the bug match the ones you chose).

Duration: The review period will start around 1 day after the PE and will last for 2-3 days (exact times will be announced later). However, we recommend that you finish this task ASAP, to minimize cutting into your exam preparation work.

We recommend reviewing bugs as a team, as some of the decisions need team consensus.

Instructions for Reviewing Bug Reports

- Don't freak out if there are a lot of bug reports. Many can be duplicates and some can be false positives. In any case, we anticipate that all of these products will have some bugs, and our penalty for bugs is not harsh. Furthermore, the penalty depends on the severity of the bug; some bugs may not even be penalized.

- The penalty for having a specific bug is not the same as the reward for reporting that bug (it's not a zero-sum game). For example, the reward for testers will be higher (because we don't expect the products to have that many bugs after they have gone through so much prior testing), and the penalty for a minor bug (e.g., -0.15; an indicative value only, as the actual value depends on the severity, type, and number of assignees) is unlikely to make a difference to your final grade, especially given that the penalty applies only if you have more than a certain number of bugs.

Accordingly, we hope you'll accept bug reports graciously (rather than fight tooth-and-nail to reject every bug report received) if you think the bug is within the ballpark of 'reasonable'.

- CATcher does not come with a UG, but the UI is fairly intuitive (there are tool tips too). Do post in the forum if you need any guidance with its usage.

- Also note that CATcher hasn't been battle-tested for this phase, in particular w.r.t. multiple team members editing the same issue concurrently. Ideally, team members should get together and work through the issues together. If you think others might be editing the same issues at the same time, use the Sync button at the top to force-sync your view with the latest data from GitHub.

- Launch CATcher and log in to the profile CS2113/T PE. It will show all the bugs assigned to your team, divided into three sections:

  - Issues Pending Responses: Issues that your team has not processed yet.
  - Issues Responded: Your job is to get all issues into this category.
  - Faulty Issues: e.g., bugs marked as duplicates of each other, or causing circular duplicate relationships. Fix the problem so that no issues remain in this category.

You must use CATcher. You are strictly prohibited from editing PE bug reports using the GitHub web interface, as it can render bug reports unprocessable by CATcher, sometimes irreversibly, and can affect the entire class. Please contact the prof if you are unable to use CATcher for some reason.

- If a bug seems to be for a different product (i.e. wrongly assigned to your team), let us know ASAP.

- If the bug is reported multiple times:

  - Mark all copies EXCEPT one as duplicates of the one left out (let's call that one the original) using the A Duplicate of tick box.
  - For each group of duplicates, all duplicates should point to one original, i.e., no multiple levels of duplicates and no cyclical duplication relationships.
  - If the duplication status is eventually accepted, all duplicates will be assumed to have inherited the type.* and severity.* from the original.

- Apply one of these labels (if missing, we assign response.Accepted):

Response Labels:

- response.Accepted: You accept it as a bug.
- response.NotInScope: It is a valid issue but not something the team should be penalized for, e.g., it was not related to features delivered in v2.1.
- response.Rejected: What the tester treated as a bug is in fact the expected behavior, or the tester was mistaken in some other way.
- response.CannotReproduce: You are unable to reproduce the behavior reported in the bug after multiple tries.
- response.IssueUnclear: The issue description is not clear. Don't post comments asking the tester to give more info. The tester will not be able to see those comments because the bug reports are anonymous.

- Apply one of these labels (if missing, we assign type.FunctionalityBug):

Type labels:

- type.FunctionalityBug: A functionality does not work as specified/expected.
- type.FeatureFlaw: Some functionality is missing from a feature delivered in v2.1 in a way that makes the feature less useful to the intended target user for normal usage, i.e., the feature is not 'complete'. In other words, an acceptance-testing bug that falls within the scope of v2.1 features. These issues are counted against the product design aspect of the project.
- type.DocumentationBug: A flaw in the documentation, e.g., a missing step, a wrong instruction, typos.

- If you disagree with the original severity assigned to the bug, you may change it to the correct level.

Bug Severity labels:

- severity.VeryLow: A flaw that is purely cosmetic and does not affect usage, e.g., a typo/spacing/layout/color/font issue in the docs or the UI that doesn't affect usage.
- severity.Low: A flaw that is unlikely to affect normal operations of the product. Appears only in very rare situations and causes only a minor inconvenience.
- severity.Medium: A flaw that causes occasional inconvenience to some users, but they can continue to use the product.
- severity.High: A flaw that affects most users and causes major problems for users, i.e., makes the product almost unusable for most users.

When applying these to documentation bugs, replace 'user' with 'reader'.

- If you need the teaching team's inputs when deciding on a bug (e.g., if you are not sure if the UML notation is correct), post in the forum. Remember to quote the issue number shown in CATcher (it appears at the end of the issue title).
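The triage rules in this list (default labels when none is applied, one original per group of duplicates, and label inheritance by duplicates) can be sketched in Python as follows. The Issue record and the helper names are hypothetical; this only models the stated rules and is not how CATcher detects Faulty Issues.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Issue:
    number: int
    severity: str
    bug_type: Optional[str] = None      # None means no type.* label applied
    response: Optional[str] = None      # None means no response.* label applied
    duplicate_of: Optional[int] = None  # number of the original, if a duplicate


def apply_defaults(issue: Issue) -> None:
    """Fill in the labels that are assumed when none is applied."""
    if issue.response is None:
        issue.response = "response.Accepted"
    if issue.bug_type is None:
        issue.bug_type = "type.FunctionalityBug"


def faulty_issues(issues: dict[int, Issue]) -> list[int]:
    """Return duplicates that break the 'one original per group' rule."""
    faulty = []
    for issue in issues.values():
        target = issue.duplicate_of
        if target is None:
            continue  # not a duplicate, nothing to check
        original = issues.get(target)
        # Faulty if it points to itself, to an unknown issue, or to another
        # duplicate (this covers multi-level chains and cycles).
        if target == issue.number or original is None or original.duplicate_of is not None:
            faulty.append(issue.number)
    return sorted(faulty)


def inherit_from_original(issues: dict[int, Issue]) -> None:
    """Copy type and severity from each original to its duplicates."""
    if faulty_issues(issues):
        raise ValueError("fix faulty duplicate links before inheriting labels")
    for issue in issues.values():
        if issue.duplicate_of is not None:
            original = issues[issue.duplicate_of]
            issue.bug_type = original.bug_type
            issue.severity = original.severity
```

Inheritance is only modelled once no issue is flagged as faulty; under the rules above, it takes effect only if the duplication status is eventually accepted.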

Some additional guidelines for bug triaging:

- Broken links in UG/DG: Severity can be low or medium depending on how many such cases and how much inconvenience they cause to the reader.

- UML notation variations caused by the diagramming tool: Can be rejected if not contradicting the standard notation (as given by the textbook) i.e., extra decorations that are not misleading.

Omitting optional notations is not a bug as long as it doesn't hinder understanding.

- What bugs can be considered duplicates? It is up to the dev team to prove conclusively that a bug is a duplicate. If the proof is not convincing enough, the bugs will be considered 'not duplicates'. Only the following cases can be considered duplicates:

- The exact same bug reported multiple times.

- Multiple buggy behaviors that are actually caused by the same defect and cannot be fixed independently.

- In diagrams, the same error appearing multiple times in the same diagram (e.g., the same notation mistake appearing several times in the same diagram). However, errors across multiple diagrams should not be flagged as duplicates, unless it is the same diagram appearing in multiple places (i.e., the same image file used in multiple places).

- Minor typos and grammar errors: These are still considered `severity.VeryLow` `type.DocumentationBug` bugs (even if they are in the actual UI), which carry a very tiny penalty.

- How to prove that something is 'not in scope': In general, features not yet implemented (and hence, not in scope) are the features that have a lower priority than the ones that have been implemented already. In addition, at least one of the following can be used to prove that a feature was left out deliberately because it was not in scope:

- The UG specifies it as not supported or coming in a future version.

- The user cannot attempt to use the missing feature or when the user does so, the software fails gracefully, possibly with a suitable error message i.e., the software should not crash.

- If a missing feature is essential for the app to be reasonably useful, its omission can be considered a feature flaw even if it can be proven as not in scope as given in the previous point.

- If a bug report contains multiple bugs (i.e., despite instructions to the contrary, a tester included multiple bugs in a single bug report), you have to choose one bug and ignore the others. If there are valid bugs, choose from valid bugs. Among the choices available, choose the one with the highest severity (in your opinion). In your response, mention which bug you chose.

- How to decide the severity of bugs related to missing requirements (e.g., missing user stories)? Depends on the potential damage the omission can cause. Keep in mind that not documenting a requirement increases the risk of it not getting implemented in a timely manner (i.e., future developers will not know that feature needs to be implemented).

You can reject bugs that you inherited from AB3 (i.e., the current behavior is the same as AB3 and you had no reason to change it because the feature applies similarly to your new product). Even bugs inherited from AB3 are counted.
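The rule for a bug report that bundles several bugs (choose from the valid bugs if there are any, then take the one with the highest severity) can be sketched in Java as below. This is a hypothetical illustration only, not part of CATcher or the grading scripts: the `Severity` enum constants, the `ReportedBug` class, and `chooseBugToRespondTo` are names assumed here, and falling back to all bugs when none is valid is this sketch's reading of the rule. Deciding validity and severity remains your own judgment.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

/** Hypothetical sketch: picking one bug from a report that (wrongly) bundles several. */
public final class MultiBugReportSketch {

    /** Severity labels, declared from lowest to highest so the natural enum order matches severity order. */
    enum Severity { VERY_LOW, LOW, MEDIUM, HIGH }

    /** One of the problems described in a single bug report, as judged by the dev team. */
    static final class ReportedBug {
        final String summary;
        final boolean valid;      // the dev team's judgment of whether it is a real bug
        final Severity severity;  // the dev team's judgment of its severity

        ReportedBug(String summary, boolean valid, Severity severity) {
            this.summary = summary;
            this.valid = valid;
            this.severity = severity;
        }
    }

    /**
     * Restricts the choice to valid bugs if at least one exists (otherwise considers all of them),
     * then returns the one with the highest severity. Returns empty for an empty report.
     */
    static Optional<ReportedBug> chooseBugToRespondTo(List<ReportedBug> bugsInReport) {
        boolean anyValid = bugsInReport.stream().anyMatch(b -> b.valid);
        return bugsInReport.stream()
                .filter(b -> !anyValid || b.valid)
                .max(Comparator.comparing((ReportedBug b) -> b.severity));
    }

    public static void main(String[] args) {
        List<ReportedBug> report = List.of(
                new ReportedBug("typo in help message", true, Severity.VERY_LOW),
                new ReportedBug("delete command crashes on empty list", true, Severity.MEDIUM),
                new ReportedBug("expected behavior misread as a bug", false, Severity.HIGH));
        // prints "Respond to: delete command crashes on empty list"
        chooseBugToRespondTo(report)
                .ifPresent(b -> System.out.println("Respond to: " + b.summary));
    }
}
```

Remember to mention in your response which bug you chose.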

- Decide who should take responsibility for the bug. Use the Assignees field to assign the issue to that person(s). There is no need to actually fix the bug; it's simply an indication/acceptance of responsibility. If there is no assignee, we will distribute the penalty for that bug (if any) among all team members.

- If it is not easy to decide the assignee(s), we recommend (but do not enforce) that the feature owner be assigned bugs related to the feature. Reason: the feature owner should have defended the feature against bugs using automated tests and defensive coding techniques.

- As far as possible, choose the correct `type.*`, `severity.*`, `response.*`, assignees, and duplicate status even for bugs you are not accepting or are marking as duplicates. Reason: your non-acceptance or duplicate status may be rejected in a later phase, in which case we need to grade it as an accepted/non-duplicate bug.

- Justify your response. For all of the following cases, you must add a comment justifying your stance. Testers will get to respond to all these cases, and they will be double-checked by the teaching team in later phases.

- downgrading severity

- non-acceptance of a bug

- changing the bug type

- non-obvious duplicate

- You can also refer to the below guidelines:


Grading bugs found in the PE

- Of the Developer Testing component (3A above, based on the bugs found in your code) and the System/Acceptance Testing component (3B above, based on the bugs found in others' code), the one you do better in will be given a 70% weight and the other a 30% weight, so that your total score is driven by your strengths rather than your weaknesses.

- Bugs rejected by the dev team, if the rejection is approved by the teaching team, will not affect marks of the tester or the developer.

- The penalty/credit for a bug varies based on the severity of the bug: `severity.High` > `severity.Medium` > `severity.Low` > `severity.VeryLow`.

- The three types (i.e., `type.FunctionalityBug`, `type.DocumentationBug`, `type.FeatureFlaw`) are counted for three different grade components. The penalty/credit can vary based on the bug type. Given that you are not told which type has a bigger impact on the grade, always choose the most suitable type for a bug rather than trying to choose a type that benefits your grade.

- The penalty for a bug is divided equally among assignees.

- Developers are not penalized for duplicate bug reports they received but the testers earn credit for duplicate bug reports they submitted as long as the duplicates are not submitted by the same tester.

- Obvious bugs (i.e., the same bug reported by many testers) earn less credit for the tester and a slightly higher penalty for the developer.

- If the team you tested has a low bug count i.e., total bugs found by all testers is low, we will fall back on other means (e.g., performance in PE dry run) to calculate your marks for system/acceptance testing.

- Your marks for developer testing depend on the bug density rather than the total bug count. Here's an example:

- n bugs found in your feature; it is a big feature consisting of a lot of code → 4/5 marks

- n bugs found in your feature; it is a small feature with a small amount of code → 1/5 marks

- You don't need to find all bugs in the product to get full marks. For example, finding half of the bugs of that product or 4 bugs, whichever is lower, could earn you full marks.

- Excessive incorrect downgrading/rejecting/duplicate-flagging (i.e., marking as duplicates), if deemed an attempt to game the system, will be penalized.
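As a worked illustration, the arithmetic behind three of the rules above (the 70/30 weighting, the "half of the bugs or 4 bugs, whichever is lower" threshold, and splitting a bug's penalty among assignees) can be sketched in Java. This is a hypothetical sketch, not the actual grading script: the class and method names, the 0-100 score scale used in the example, and the equal split among all team members when a bug has no assignee are assumptions made here for illustration.

```java
/** Hypothetical sketch of three PE grading rules; names and scales are illustrative assumptions. */
public final class PeGradingSketch {

    /** The testing component you do better in gets a 70% weight; the other gets 30%. */
    static double combinedTestingScore(double developerTesting, double systemTesting) {
        double stronger = Math.max(developerTesting, systemTesting);
        double weaker = Math.min(developerTesting, systemTesting);
        return 0.7 * stronger + 0.3 * weaker;
    }

    /** Half of the product's bugs or 4 bugs, whichever is lower, could be enough for full marks. */
    static double bugsThatCouldEarnFullMarks(int totalBugsInProduct) {
        return Math.min(totalBugsInProduct / 2.0, 4.0);
    }

    /** Splits a bug's penalty equally among assignees; assumed equal split across the team if unassigned. */
    static double penaltyPerMember(double bugPenalty, int assignees, int teamSize) {
        int sharers = assignees > 0 ? assignees : teamSize;
        return bugPenalty / sharers;
    }

    public static void main(String[] args) {
        System.out.println(combinedTestingScore(80, 50));  // 0.7 * 80 + 0.3 * 50, i.e., about 71
        System.out.println(bugsThatCouldEarnFullMarks(6)); // min(3, 4), prints 3.0
        System.out.println(penaltyPerMember(1.0, 2, 5));   // split between 2 assignees, prints 0.5
    }
}
```

Note that the weighting is symmetric: scoring 80 in developer testing and 50 in system testing gives the same combined value as the reverse.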

PE Phase 3: Tester Response

Start: Within 1 day after Phase 2 ends.

While you are waiting for Phase 3 to start, comments will be added to the bug reports in your /pe repo to indicate the response each received from the receiving team. Please do not edit any of those comments or reply to them via the GitHub interface. Doing so can invalidate them, in which case the grading script will assume that you agree with the dev team's response. Instead, wait until the start of Phase 3 is announced, after which you should use CATcher to respond.

Deadline: Fri, Apr 23rd 2359

- In this phase you will get to state whether you agree or disagree with the dev team's response to the bugs you reported. If a bug you reported has been subjected to any of the following by the receiving dev team, you can record your objection and the reason for it:

- not accepted

- severity downgraded

- bug type changed

- bug flagged as duplicate (Note that you still get credit for bugs flagged as duplicates, unless you reported both bugs yourself. Nevertheless, it is in your interest to object to bugs being flagged incorrectly as duplicates because when a bug is reported by more testers, it will be considered an 'obvious' bug and will earn slightly less credit than otherwise)

- If you disagree with the team's decision but would like to revise your own initial type/severity/response as well, you can state that in your explanation, e.g., you rated the bug `severity.High` and the team changed it to `severity.Low`, but now you think it should be `severity.Medium`.

- You can also refer to the additional guidelines for bug triaging and the rules for grading bugs found in the PE, both given in Phase 2 above.

- If you do not respond to a dev response, we'll assume that you agree with it.

- Procedure:

- When the phase has been announced as open, log in to CATcher as usual (profile: CS2113/T PE).

You may use the latest version of CATcher or the Web version of CATcher.

- For each issue listed in the Issues Pending Responses section:

- Click on it to go to the details, and read the dev team's response.

- If you disagree with any of the items listed, tick the I disagree checkbox, enter your justification for the disagreement, and click Save.

- If you are fine with the team's changes, click Save without any other changes, upon which the issue will move to the Issue Responded section.

- Note that only bugs that require your response will be shown by CATcher. Bugs already accepted as reported by the team will not appear in CATcher as there is nothing for you to do about them.

You must use CATcher. You are strictly prohibited from editing PE bug reports using the GitHub Web interface, as doing so can render bug reports unprocessable by CATcher, sometimes irreversibly, and can affect the entire class. Please contact the prof if you are unable to use CATcher for some reason.

PE Phase 4: Tutor Moderation

- In this phase tutors will look through all dev responses you objected to in the previous phase and decide on a final outcome.

- In the unlikely case we need your inputs, a tutor will contact you.

Grading: The PE dry run affects your grade in the following ways.

- If you scored less than half of the marks in the PE, we will consider your performance in PE dry run as well when calculating the PE marks.

- PE dry run is a way for you to practice for the actual PE.

- Taking part in the PE dry run will earn you participation points.

- There is no penalty for bugs reported in your product. Every bug you find is a win-win for you and the team whose product you are testing.

Why:

- To train you to do manual testing, bug reporting, bug triaging (i.e., assigning priority order), bug fixing, communicating with users/testers/developers, evaluating products, etc.

- To help you improve your product before the final submission.

PE-D Preparation

- Ensure that you have accepted the invitation to join the GitHub org used by the module. Go to https://github.com/nus-cs2113-AY2021S2 to accept the invitation.

- Ensure you have access to a computer that is able to run module projects, e.g., has the right Java version.

- Download the latest CATcher and ensure you can run it on your computer. You should have done this when you smoke-tested CATcher earlier in the week.

If not using CATcher

Issues created for PE-D and PE need to be in a precise format for our grading scripts to work. Incorrectly formatted responses will have to be discarded. Therefore, you are not allowed to use the GitHub interface for PE-D and PE activities, unless you have obtained our permission first.

- Create a public repo in your GitHub account with the following name:

- PE Dry Run: ped

- PE: pe

- Enable its issue tracker and add the following labels to it (the label names should be precisely as given).

Bug Severity labels:

- `severity.VeryLow`: A flaw that is purely cosmetic and does not affect usage, e.g., typo/spacing/layout/color/font issues in the docs or the UI that don't affect usage.

- `severity.Low`: A flaw that is unlikely to affect normal operations of the product. It appears only in very rare situations and causes only a minor inconvenience.

- `severity.Medium`: A flaw that causes occasional inconvenience to some users, but they can continue to use the product.

- `severity.High`: A flaw that affects most users and causes major problems for them, i.e., makes the product almost unusable for most users.

When applying these labels to documentation bugs, replace 'user' with 'reader'.

Type labels:

- `type.FunctionalityBug`: A functionality does not work as specified/expected.

- `type.FeatureFlaw`: Some functionality is missing from a feature delivered in v2.1 in a way that makes the feature less useful to the intended target user for normal usage, i.e., the feature is not 'complete'. In other words, an acceptance-testing bug that falls within the scope of v2.1 features. These issues are counted against the product design aspect of the project.

- `type.DocumentationBug`: A flaw in the documentation, e.g., a missing step, a wrong instruction, typos.

- Have a good screen grab tool with annotation features so that you can quickly take a screenshot of a bug, annotate it, and post it in the issue tracker.

- You can use Ctrl+V to paste a picture from the clipboard into a text box in a bug report.

- Download the product to be tested.

- PE Dry Run: After you have been notified which team to test (likely in the morning of PE-D day), download the jar file from the team's releases page.

- PE: After you have been notified of the download location, download the zip file that bears your name.

Testing tips

Use easy-to-remember patterns in test data. For example, if you use 12345678 as a phone number while testing and it appears as 2345678 somewhere else in the UI, you can easily spot that the first digit has gone missing. But if you used a random number instead, detecting that bug won't be as easy. Similarly, if you use Alice Bee, Benny Lee, Charles Pereira as test data (note how the names start with letters A, B, C), it will be easy to detect if one goes missing, or they appear in the incorrect order.

Go wide before you go deep. Do light testing of all features first. That will give you a better idea of which features are likely to be buggier. Spending equal time on all features, or testing in the order the features appear in the UG, is not always the best approach.

PE-D During the session

Use MS Teams (not Zoom) to contact the prof if you need help during the session. Use Zoom chat only if you don't get a response via MS Teams.

How many bugs to report?

Report as many bugs as you can find during the given time. Take longer if you need to. If you can't find many bugs at this stage, when the product is largely untested, you are unlikely to be able to find enough bugs in the better-tested final submission later. In that case, there is all the more reason to spend more time and find more bugs now.

Bug reports marked as invalid by the receiving team later will not count for credit.

The median number of bugs reported in the previous semester's PE-D was 9. Someone reporting just 2-3 bugs is usually a sign of a half-hearted attempt rather than a lack of bugs to find. If you really can't find bugs, at least submit suggestions for improvements.

PE and PE-D are manual testing sessions. Using test automation tools or scripting is not allowed.

Test the product and report bugs as described below, when the prof informs you to begin testing.

Testing instructions for PE and PE-D

a) Launching the JAR file

- Get the jar file to be tested:

- PE Dry Run: Download the latest JAR file from the team's releases page, if you haven't done this already.

- PE: Download the zip file from the given location, if you haven't done that already.

- Unzip the downloaded zip file with the password (to be given to you at the start of the PE, via the LumiNUS gradebook). This will give you another zip file with the name suffix _inner.zip.

- Unzip the inner zip file. This will give you the jar file and other PDF files needed for the PE. Warning: do not run the jar file while it is still inside the zip file.

- Put the downloaded jar file in an empty folder in which the app is allowed to create files (i.e., do not use a write-protected folder).

- Open a command window. Run the `java -version` command to ensure you are using Java 11.

- Check the UG to see if there are extra things you need to do before launching the JAR file, e.g., download another file from somewhere.

You may visit the team's releases page on GitHub if they have provided some extra files you need to download.

- Launch the jar file using the `java -jar` command rather than double-clicking (reason: to ensure the jar file is run with the same Java version that you verified above). Use double-clicking as a last resort.

If you are on Windows, use the DOS prompt or PowerShell (not the WSL terminal) to run the JAR file.

- If the product doesn't work at all: If the product fails catastrophically, e.g., it cannot even launch, or even the basic commands crash the app, contact the invigilator (via MS Teams, and failing that, via Zoom chat) to receive a fallback team to test.

b) What to test

- Test the product based on the User Guide available from their GitHub website: https://{team-id}.github.io/tp/UserGuide.html.

- Do system testing first (i.e., does the product work as specified by the documentation?). If there is time left, you can do acceptance testing as well (i.e., does the product solve the problem it claims to solve?).

- Test based on the Developer Guide (Appendix named Instructions for Manual Testing) and the User Guide. The testing instructions in the Developer Guide can provide you some guidance but if you follow those instructions strictly, you are unlikely to find many bugs. You can deviate from the instructions to probe areas that are more likely to have bugs.

- As before, do both system testing and acceptance testing but give priority to system testing as those bugs can earn you more credit.

c) What bugs to report?

- You may report functionality bugs, UG bugs, and feature flaws.


These are considered functionality bugs:

Behavior differs from the User Guide

A legitimate user behavior is not handled e.g. incorrect commands, extra parameters

Behavior is not specified and differs from normal expectations e.g. error message does not match the error


These are considered feature flaws:

The feature does not solve the stated problem of the intended user i.e., the feature is 'incomplete'

Hard-to-test features

Features that don't fit well with the product

Features that are not optimized enough for fast-typists or target users


These are considered UG bugs (if they hinder the reader):

Use of visuals

- Not enough visuals e.g., screenshots/diagrams

- The visuals are not well integrated to the explanation

- The visuals are unnecessarily repetitive e.g., same visual repeated with minor changes

Use of examples:

- Not enough or too many examples e.g., sample inputs/outputs

Explanations:

- The explanation is too brief or unnecessarily long.

- The information is hard to understand for the target audience. e.g., using terms the reader might not know

Neatness/correctness:

- looks messy

- not well-formatted

- broken links, other inaccuracies, typos, etc.

- hard to read/understand

- unnecessary repetitions (i.e., hard to see what's similar and what's different)

- You can also post suggestions on how to improve the product.

Be diplomatic when reporting bugs or suggesting improvements. For example, instead of criticising the current behavior, simply suggest alternatives to consider.

- Report functionality bugs:


These are considered functionality bugs:

Behavior differs from the User Guide

A legitimate user behavior is not handled e.g. incorrect commands, extra parameters

Behavior is not specified and differs from normal expectations e.g. error message does not match the error

- Do not post suggestions. However, if the product is missing a critical functionality that makes the product less useful to the intended user, it can be reported as a bug of type `type.FeatureFlaw`. The dev team is allowed to reject bug reports framed as mere suggestions and/or lacking a convincing justification as to why the omission of that functionality is problematic.


These are considered feature flaws:

The feature does not solve the stated problem of the intended user i.e., the feature is 'incomplete'

Hard-to-test features

Features that don't fit well with the product

Features that are not optimized enough for fast-typists or target users

- You may also report UG bugs.


These are considered UG bugs (if they hinder the reader):

Use of visuals

- Not enough visuals e.g., screenshots/diagrams

- The visuals are not well integrated to the explanation

- The visuals are unnecessarily repetitive e.g., same visual repeated with minor changes

Use of examples:

- Not enough or too many examples e.g., sample inputs/outputs

Explanations:

- The explanation is too brief or unnecessarily long.

- The information is hard to understand for the target audience. e.g., using terms the reader might not know

Neatness/correctness:

- looks messy

- not well-formatted

- broken links, other inaccuracies, typos, etc.

- hard to read/understand

- unnecessary repetitions (i.e., hard to see what's similar and what's different)

d) How to report bugs

- Post bugs as you find them (i.e., do not wait to post all bugs at the end) because bug reports created/modified after the allocated time will not count.

- Launch CATcher, and log in to the correct profile:

- PE Dry Run: CS2113/T PE Dry run

- PE: CS2113/T PE

- Post bugs using CATcher.

Issues created for PE-D and PE need to be in a precise format for our grading scripts to work. Incorrectly formatted responses will have to be discarded. Therefore, you are not allowed to use the GitHub interface for PE-D and PE activities, unless you have obtained our permission first.

- Post bug reports in the following repo you created earlier:

- PE Dry Run: ped

- PE: pe

- The whole description of the bug should be in the issue description i.e., do not add comments to the issue.

e) Bug report format

- Each bug should be a separate issue. i.e., do not report multiple problems in the same bug report.

- Write good quality bug reports; poor quality or incorrect bug reports will not earn credit.

- Use a descriptive title.

- Give a good description of the bug with steps to reproduce, expected behavior, actual behavior, and screenshots. If the receiving team cannot reproduce the bug, you will not be able to get credit for it.

- Assign exactly one `severity.*` label to the bug report. Bug reports without a severity label are considered `severity.Low` (lower-severity bugs earn lower credit).

Bug Severity labels:

- `severity.VeryLow`: A flaw that is purely cosmetic and does not affect usage, e.g., typo/spacing/layout/color/font issues in the docs or the UI that don't affect usage.

- `severity.Low`: A flaw that is unlikely to affect normal operations of the product. It appears only in very rare situations and causes only a minor inconvenience.

- `severity.Medium`: A flaw that causes occasional inconvenience to some users, but they can continue to use the product.

- `severity.High`: A flaw that affects most users and causes major problems for them, i.e., makes the product almost unusable for most users.

When applying these labels to documentation bugs, replace 'user' with 'reader'.

- Assign exactly one `type.*` label to the issue.

Type labels:

- `type.FunctionalityBug`: A functionality does not work as specified/expected.

- `type.FeatureFlaw`: Some functionality is missing from a feature delivered in v2.1 in a way that makes the feature less useful to the intended target user for normal usage, i.e., the feature is not 'complete'. In other words, an acceptance-testing bug that falls within the scope of v2.1 features. These issues are counted against the product design aspect of the project.

- `type.DocumentationBug`: A flaw in the documentation, e.g., a missing step, a wrong instruction, typos.

At the end of the project each student is required to submit a Project Portfolio Page.

PPP Objectives

- For you to use (e.g. in your resume) as a well-documented data point of your SE experience

- For evaluators to use as a data point for evaluating your project contributions

PPP Sections to include

- Overview: A short overview of your product to provide some context to the reader. The opening 1-2 sentences may be reused by all team members. If your product overview extends beyond 1-2 sentences, the remainder should be written by yourself.

- Summary of Contributions: Suggested items to include:

- Code contributed: Give a link to your code on tP Code Dashboard. The link is available in the Project List Page -- linked to the icon under your profile picture.

- Enhancements implemented: A summary of the enhancements you implemented.

- Contributions to documentation: Which sections did you contribute to the UG?

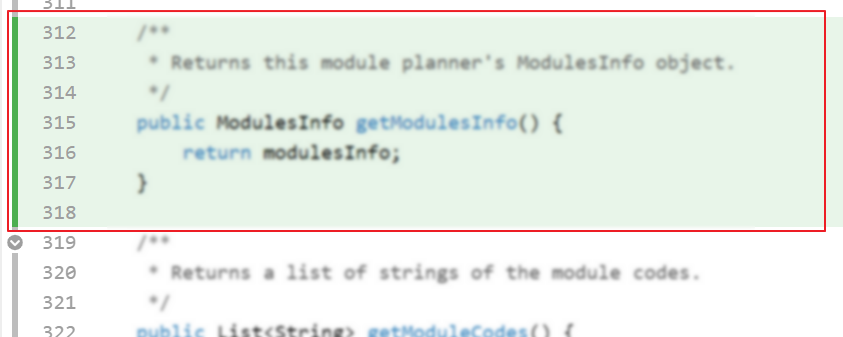

- Contributions to the DG: Which sections did you contribute to the DG? Which UML diagrams did you add/update?

- Contributions to team-based tasks:

- Review/mentoring contributions: Links to PRs reviewed, instances of helping team members in other ways

- Contributions beyond the project team:

- Evidence of helping others, e.g., responses you posted in our forum, bugs you reported in other teams' products

- Evidence of technical leadership e.g. sharing useful information in the forum

Team-tasks are the tasks that someone in the team has to do.

Examples of team-tasks

Here is a non-exhaustive list of team-tasks:

- Setting up the GitHub team org/repo

- Necessary general code enhancements

- Setting up tools e.g., GitHub, Gradle

- Maintaining the issue tracker

- Release management

- Updating user/developer docs that are not specific to a feature e.g. documenting the target user profile

- Incorporating more useful tools/libraries/frameworks into the product or the project workflow (e.g. automate more aspects of the project workflow using a GitHub plugin)

Keep in mind that evaluators will use the PPP to estimate your project effort. We recommend that you mention things that will earn you a fair score, e.g., explain how deep the enhancement is, why it is complete, how hard it was to implement, etc.

- OPTIONAL Contributions to the Developer Guide (Extracts): Reproduce the parts in the Developer Guide that you wrote. Alternatively, you can show the various diagrams you contributed.

- OPTIONAL Contributions to the User Guide (Extracts): Reproduce the parts in the User Guide that you wrote.

PPP Format

- File name (i.e., in the repo): `docs/team/github_username_in_lower_case.md`, e.g., `docs/team/goodcoder123.md`

- Follow the example in the AddressBook-Level3.

To convert the UG/DG/PPP into PDF format, go to the generated page in your project's github.io site and use this technique to save it as a PDF file. Using other techniques can result in poor resolution (which will be considered a bug) and unnecessarily large files.

Ensure hyperlinks in the PDF files work. Your UG/DG/PPP will be evaluated using PDF files during the PE. Broken/non-working hyperlinks in the PDF files will be considered bugs and will count against your project score. Again, use the conversion technique given above to ensure links in the PDF files work.

Try the PDF conversion early. If you do it at the last minute, you may not have time to fix any problems in the generated PDF files (such problems are more common than you think).

PPP Page Limit

| Content | Recommended (pages) | Hard limit (pages) |
|---|---|---|
| Overview + Summary of contributions | 0.5-1 | 2 |
| [Optional] Contributions to the User Guide | 1 | |
| [Optional] Contributions to the Developer Guide | 3 | |

- The page limits given above apply after converting to PDF format. The amount of content you actually need is less than these numbers suggest, because the HTML → PDF conversion adds a lot of spacing around content.

PE-D After the session

- The relevant bug reports will be transferred to your issue tracker within a day after the session is over. Once you have received the bug reports for your product, you can decide whether you will act on reported issues before the final submission v2.1. For some issues, the correct decision could be to reject or postpone to a version beyond v2.1.

- If you have received stray bug reports (i.e., bug reports that don't seem to be about your project), do let us know ASAP (email the prof).

- You can navigate to the original bug report (via the back-link provided in the bug report given to you) and post in that issue thread to communicate with the tester who reported the bug e.g. to ask for more info, etc. However, the tester is not obliged to respond. Note that simply replying to the bug report in your own repo will not notify the tester.

- Do not argue with the tester to try to convince that person that your way is correct/better. If at all, you can gently explain the rationale for the current behavior, but do not waste time getting involved in long arguments. If you think the suggestion/bug is unreasonable, just thank the tester for their view and discontinue the discussion.

- If a bug report you received is not useful, add the `invalid` tag to it (create that tag if it doesn't exist in your issue tracker). We will not count such bugs when giving credit to testers.

Note that listing bugs as 'known bugs' in the UG or adding unreasonable constraints to the UG to make bugs 'out of scope' will not exempt those bugs from the final grading. That is, PE testers can still earn credit for reporting those bugs and you will still be penalized for them.

However, a product is allowed to have 'known limitations' (e.g., a daily expense tracking application meant for students is unable to handle expenses larger than $999) as long as they don't degrade the product's use within the intended scope. They will not be penalized.

2 Tweak the product as per peer-testing results

- Follow the procedure for dealing with PED bugs you received, as described in the "PE-D After the session" panel above (Admin tP → Deliverables → After the PED).

- Freeze features. As mentioned earlier, you are strongly discouraged from adding new features in this iteration. The remaining time is to be spent fixing problems discovered late and wrapping up the final release.

- Update documentation to match the product.

- Consider increasing test coverage by adding more tests if it is lower than the level you would like it to be. Take note of our expectation on test code (given in the panel below).

Admin tP → Grading → Expectation on testing

- Expectation: Write some automated tests so that we can evaluate your ability to write tests.

🤔 How much testing is enough? We expect you to decide. You learned different types of testing and what they try to achieve. Based on that, you should decide how much of each type is required. Similarly, you can decide to what extent you want to automate tests, depending on the benefits and the effort required.

There is no requirement for a minimum coverage level. Note that in a production environment you are often required to have at least 90% of the code covered by tests. In this project, it can be less. The weaker your tests are, the higher the risk of bugs, which will cost marks if not fixed before the final submission.

- After you have sufficient code coverage, fix the remaining code quality problems and bring the quality up to your target level.

Admin tP → Grading → Code Quality Tips

- At least some evidence of these (see here for more info):

- logging

- exceptions

- assertions

- No coding standard violations, e.g., all boolean variables/methods sound like booleans. Checkstyle can prevent only some coding standard violations; others need to be checked manually.

- SLAP is applied at a reasonable level. Long methods or deeply-nested code are symptoms of low SLAP.

- No noticeable code duplication, i.e., if there are multiple blocks of code that vary only in minor ways, try to extract the similarities into one place, especially in test code.

- Evidence of applying code quality guidelines covered in the module.
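To make the tips above more concrete, here is a minimal sketch, assuming a Java codebase like AddressBook-Level3. The `ExpenseList` class, its `InvalidExpenseException`, and the $999 cap are all hypothetical (borrowed from the expense-tracker example of a 'known limitation' given earlier); this is one possible way to show logging (`java.util.logging`), a custom exception, an assertion on an internal invariant, boolean-sounding method names, and a public method written at a single level of abstraction, not a required pattern.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.logging.Level;
import java.util.logging.Logger;

/** Hypothetical expense list, used only to illustrate the code quality tips. */
public class ExpenseList {

    /** Checked exception for user input that cannot become a valid expense. */
    public static class InvalidExpenseException extends Exception {
        public InvalidExpenseException(String message) {
            super(message);
        }
    }

    private static final Logger logger = Logger.getLogger(ExpenseList.class.getName());

    // Hypothetical 'known limitation': amounts above this cap are rejected.
    private static final int MAX_AMOUNT = 999;

    private final List<Integer> amounts = new ArrayList<>();

    /** Reads at one level of abstraction (SLAP): parse, validate, store. */
    public void addExpense(String input) throws InvalidExpenseException {
        int amount = parseAmount(input);
        validateAmount(amount);
        store(amount);
    }

    /** Boolean-returning methods are named so that they read like booleans. */
    public boolean isEmpty() {
        return amounts.isEmpty();
    }

    public boolean hasExpenseAbove(int threshold) {
        return amounts.stream().anyMatch(amount -> amount > threshold);
    }

    public int size() {
        return amounts.size();
    }

    private int parseAmount(String input) throws InvalidExpenseException {
        if (input == null) {
            throw new InvalidExpenseException("Amount is missing");
        }
        try {
            return Integer.parseInt(input.trim());
        } catch (NumberFormatException e) {
            // Log the rejected input, then report it to the caller as a domain-specific exception.
            logger.log(Level.WARNING, "Rejected non-numeric amount: " + input);
            throw new InvalidExpenseException("Amount must be a whole number: " + input);
        }
    }

    private void validateAmount(int amount) throws InvalidExpenseException {
        if (amount <= 0 || amount > MAX_AMOUNT) {
            throw new InvalidExpenseException("Amount must be between 1 and " + MAX_AMOUNT);
        }
    }

    private void store(int amount) {
        int sizeBefore = amounts.size();
        amounts.add(amount);
        // Internal invariant, not input validation; only checked when the JVM runs with -ea.
        assert amounts.size() == sizeBefore + 1 : "expense was not stored";
        logger.log(Level.FINE, "Stored expense of " + amount);
    }
}
```

A caller would wrap `addExpense(...)` in a `try`/`catch` for `InvalidExpenseException` and show its message to the user. The design choice in this sketch is that invalid user input is handled through exceptions, while `assert` guards only internal invariants, because Java assertions are disabled unless the JVM is started with `-ea`.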

3 Draft the PPP

- Create the first version of your Project Portfolio Page (PPP).

Reason: Each member needs to create a PPP to describe their contribution to the project.

Admin tP → Deliverables → Project Portfolio Page

At the end of the project, each student is required to submit a Project Portfolio Page.

PPP Objectives

- For you to use (e.g. in your resume) as a well-documented data point of your SE experience

- For evaluators to use as a data point for evaluating your project contributions

PPP Sections to include

- Overview: A short overview of your product to provide some context to the reader. The opening 1-2 sentences may be reused by all team members. If your product overview extends beyond 1-2 sentences, you should write the remainder yourself.

- Summary of Contributions: Suggested items to include:

- Code contributed: Give a link to your code on the tP Code Dashboard. The link is available on the Project List Page, linked to the icon under your profile picture.

- Enhancements implemented: A summary of the enhancements you implemented.

- Contributions to documentation: Which sections did you contribute to the UG?

- Contributions to the DG: Which sections did you contribute to the DG? Which UML diagrams did you add/update?

- Contributions to team-based tasks:

- Review/mentoring contributions: Links to PRs reviewed, instances of helping team members in other ways

- Contributions beyond the project team:

- Evidence of helping others, e.g., responses you posted in our forum, bugs you reported in other teams' products

- Evidence of technical leadership, e.g., sharing useful information in the forum

Team-tasks are the tasks that someone in the team has to do.

Examples of team-tasks

Here is a non-exhaustive list of team-tasks:

- Setting up the GitHub team org/repo

- Necessary general code enhancements

- Setting up tools e.g., GitHub, Gradle

- Maintaining the issue tracker

- Release management

- Updating user/developer docs that are not specific to a feature e.g. documenting the target user profile

- Incorporating more useful tools/libraries/frameworks into the product or the project workflow (e.g. automate more aspects of the project workflow using a GitHub plugin)

Keep in mind that evaluators will use the PPP to estimate your project effort. We recommend that you mention things that will earn you a fair score, e.g., explain how deep the enhancement is, why it is complete, how hard it was to implement, etc.

- OPTIONAL Contributions to the Developer Guide (Extracts): Reproduce the parts in the Developer Guide that you wrote. Alternatively, you can show the various diagrams you contributed.

- OPTIONAL Contributions to the User Guide (Extracts): Reproduce the parts in the User Guide that you wrote.

PPP Format

- File name (i.e., in the repo): `docs/team/github_username_in_lower_case.md`, e.g., `docs/team/goodcoder123.md`

- Follow the example in AddressBook-Level3.

To convert the UG/DG/PPP into PDF format, go to the generated page in your project's github.io site and use this technique to save it as a PDF file. Using other techniques can result in poor resolution (which will be considered a bug) and unnecessarily large files.

Ensure hyperlinks in the PDF files work. Your UG/DG/PPP will be evaluated using PDF files during the PE. Broken/non-working hyperlinks in the PDF files will be considered bugs and will count against your project score. Again, use the conversion technique given above to ensure links in the PDF files work.

Try the PDF conversion early. If you do it at the last minute, you may not have time to fix any problems in the generated PDF files (such problems are more common than you think).

PPP Page Limit

| Content | Recommended (pages) | Hard limit (pages) |
|---|---|---|
| Overview + Summary of contributions | 0.5-1 | 2 |
| [Optional] Contributions to the User Guide | 1 | |
| [Optional] Contributions to the Developer Guide | 3 | |

- The page limits given above apply after converting to PDF format. The amount of content you actually need is less than these numbers suggest, because the HTML → PDF conversion adds a lot of spacing around content.

4 Double-check RepoSense compatibility

- Once again, double-check to ensure the code attributed to you by RepoSense is correct.

Admin tP → mid-v2.1 → Making the Code RepoSense-Compatible

- Ensure your code is RepoSense-compatible (i.e., RepoSense can detect your code as yours) and the code it attributes to you is indeed the code written by you, as explained below:

- Go to the tP Code Dashboard. Click on the `</>` icon against your name and verify that the lines attributed to you (i.e., lines marked in green) reflect your code contribution correctly. This is important because some aspects of your project grade (e.g., code quality) will be graded based on those lines.

- More info on how to make the code RepoSense-compatible:

- Go to the tp Code Dashboard. Click on the